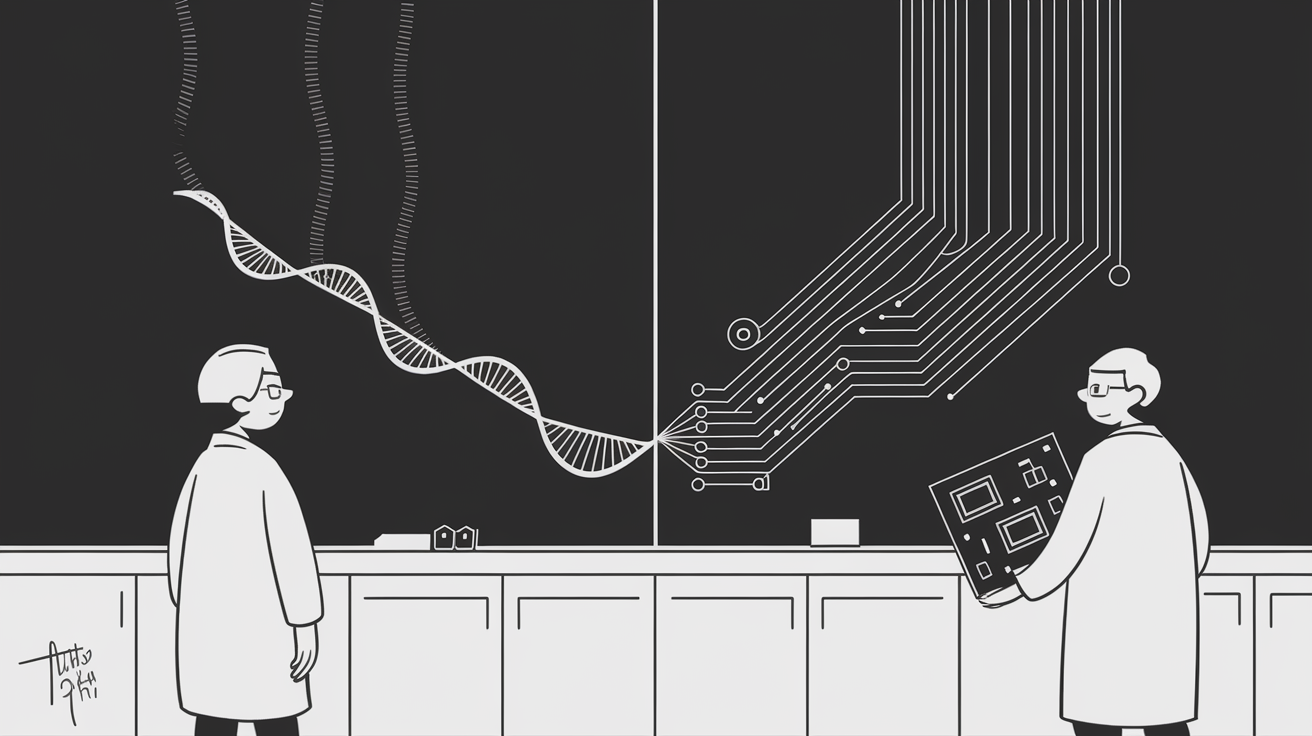

LLM Model Weights as the DNA of AI

Large Language Models (LLMs) have weights that encode the knowledge the model has learned from data. This role is often likened to DNA in living organisms - a static code carrying information that can generate complex outcomes. At first glance this analogy seems metaphorical, but there are deep conceptual and technical parallels. Both DNA and LLM weights serve as compressed repositories of vast information, both are optimized through an iterative search process (evolution or training), both are expressive codes that generate complex structures or behaviors, and both can undergo mutations or fine-tuning leading to adaptation. This essay explores these analogies rigorously, drawing on research from genetics, information theory, neuroscience, and AI. By examining how DNA and model weights store and use information, how they are optimized, and how they adapt, we can appreciate the fundamental similarities between biological evolution and machine learning.

1. Compression of Knowledge

DNA as Compressed Information: DNA is nature's data storage medium, encoding the instructions for building and maintaining an organism in a remarkably compact form. The human genome consists of roughly 3 billion base pairs - about 6 billion bits of information, which is around 700 MB if stored as raw data (Nakamura Research Group Home Page). This relatively small "file" encodes the blueprint for a human being, compressing an immense amount of evolutionary knowledge. In Shannon entropy terms, if each DNA base were equally likely, it would carry 2 bits of information. Real genomes are not completely random, but their entropy is still high - conventional analyses estimate around 1.9 bits of information per nucleotide on average (Significantly lower entropy estimates for natural DNA sequences - PubMed). This means the genome's sequence is densely packed with information; there is little redundancy, much like a highly efficient compression of life's experience. Biologically, this compressed code stores the results of billions of years of evolution, encoding solutions to survival (for example, the instructions to build eyes, immune systems, etc.) in a minimal representation. DNA's storage efficiency is so great that it far outstrips our human-engineered media: researchers note that just 4 grams of DNA could theoretically store all the world's annual digital data, with a storage density about 1000 times higher than silicon chips (DNA as a digital information storage device: hope or hype? - PMC). This highlights how DNA exemplifies maximal information storage in minimal space, due both to its chemical encoding (each base is only two bits) and the evolutionary pressure to keep genomes efficient.
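
The arithmetic behind these figures can be checked with a short sketch. It assumes a round 3 billion base pairs and computes only zeroth-order entropy (single-base frequencies); the two toy sequences are made up for illustration and are not real genomic data. With these assumptions the raw size comes out at 750 MB in decimal units, in line with the rounded figure above.

```python
import math
from collections import Counter

def shannon_entropy_bits(sequence: str) -> float:
    """Zeroth-order Shannon entropy in bits per symbol, from single-symbol frequencies."""
    counts = Counter(sequence)
    total = len(sequence)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

BASE_PAIRS = 3_000_000_000          # approximate human genome length (assumed round number)
BITS_PER_BASE = math.log2(4)        # four equally likely bases -> 2 bits each

raw_bits = BASE_PAIRS * BITS_PER_BASE
raw_megabytes = raw_bits / 8 / 1e6  # decimal megabytes

uniform = "ACGT" * 250                                   # toy sequence, all bases equally frequent
skewed = "A" * 400 + "C" * 100 + "G" * 100 + "T" * 400   # toy AT-rich sequence

print(f"raw genome size: {raw_bits:.1e} bits = {raw_megabytes:.0f} MB")
print(f"uniform toy entropy: {shannon_entropy_bits(uniform):.3f} bits/base")
print(f"skewed toy entropy:  {shannon_entropy_bits(skewed):.3f} bits/base")
```

A skewed base composition lowers the entropy below 2 bits per base, which is why real genomes fall slightly short of the theoretical maximum.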

LLM Weights as Compressed Information: Similarly, the learned weights of a large AI model serve as a compressed repository of the training data's knowledge. An LLM like GPT-3 is trained on hundreds of billions of tokens of text from the internet (Entropy Law: The Story Behind Data Compression and LLM Performance), yet all the patterns, facts, and linguistic structures from that massive corpus end up distilled into a finite set of parameters. For example, GPT-3 has 175 billion parameters, which (at 16 bits/weight) is on the order of 350 GB of data - far smaller than the tens of terabytes of raw web text from which its training corpus was filtered. In effect, the training process squeezes the essence of the data into the weight values. Indeed, researchers describe generative model training as a form of data compression: the training algorithm plays the role of a compression algorithm, and the model's weights are "the compressed version of the training set." In this view, the act of inference (using the model to generate output) is then a decompression process, with the input prompt serving as a key to decode relevant parts of the stored knowledge (Training Foundation Models as Data Compression: On Information, Model Weights and Copyright Law). This is not just a loose analogy; it is supported by evidence. A well-trained LLM can reproduce portions of its training data (for instance, memorized quotes or code snippets), indicating that specific information from the data is indeed encoded in the weights (Training Foundation Models as Data Compression: On Information, Model Weights and Copyright Law). From an information-theoretic standpoint, the model weights capture the statistical structure of the data (word co-occurrences, grammatical rules, facts, etc.) in a highly efficient manner. Many weights will encode general patterns rather than verbatim data, which is a form of lossy compression optimized for generalization. Despite this, the encoding is still quite dense. 
Compression studies have shown that LLM weights contain redundancy - for example, you can often quantize weights to 8-bit or 4-bit precision with minimal performance loss (A Comprehensive Evaluation of Quantization Strategies for Large Language Models). This is analogous to the non-coding or redundant portions of DNA which can be altered without losing functionality. In both cases, the system balances high information density with just enough redundancy or error-tolerance to be robust. Overall, both DNA and LLM weights function as compact knowledge stores: DNA compresses the information needed to build and adapt an organism, and model weights compress the knowledge needed to generate correct and coherent outputs. Each is like a library encoded in code - nucleotides or numbers - with astonishing efficiency.
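
The storage figures in this section follow from simple arithmetic, sketched below. The helper name `weight_storage_gb` is hypothetical, and the calculation counts only raw weights, ignoring file metadata and any per-block scaling factors that real quantization formats add.

```python
PARAMS = 175_000_000_000   # GPT-3 parameter count cited above

def weight_storage_gb(num_params: int, bits_per_weight: int) -> float:
    """Storage for the raw weights in decimal gigabytes, ignoring any metadata."""
    return num_params * bits_per_weight / 8 / 1e9

for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_storage_gb(PARAMS, bits):.1f} GB")
```

Halving the bits per weight halves the storage, so the redundancy that quantization exploits translates directly into a smaller "genome" for the model.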

2. Optimization via Evolution vs. Learning

The reason DNA and LLM weights contain such rich, structured information is that they are the product of iterative optimization processes that discover and encode knowledge. In nature, the optimization algorithm is Darwinian evolution by natural selection, and in machine learning, it is gradient descent with backpropagation. While these processes differ in mechanism, they share deep similarities in how they gradually improve a solution and capture useful structure.

Natural Selection as an Optimizer: Evolution can be viewed as a blind but powerful search algorithm exploring the space of possible genomes. In each generation, random mutations and recombination introduce variations in DNA sequences. The environment then acts as a fitness function, selecting which organisms survive and reproduce. Over many generations, beneficial mutations (those that improve fitness) tend to accumulate in the population, while deleterious ones are weeded out. This iterative cycle - variation and selection repeated over millennia - optimizes organisms for their environment. In effect, natural selection "learns" the structure of the environment and encodes it in DNA. The genome gradually becomes a better and better fit to the challenges faced by the species (finding food, avoiding predators, reproducing, etc.). We can quantify this in information-theoretic terms: as a population adapts, the genome distribution shifts away from randomness. One study notes that "selection accumulates information in the genome", measurable as the Kullback-Leibler divergence between the evolved gene distribution and a neutral (random) distribution (Accumulation and maintenance of information in evolution - PubMed). In other words, the genome gains information about what gene combinations are favorable. This is directly analogous to reducing error or surprise - much as a machine learning model reduces its loss. Biologists have even described natural selection as a hill-climbing process on a "fitness landscape", where a population "moves" towards peaks of higher fitness. In fact, there is a precise sense in which natural selection is an optimization algorithm searching for better solutions (gradient descent | Fewer Lacunae). Evolutionary algorithms in AI exploit this by mimicking mutation and selection to evolve neural network weights, confirming that selection can indeed optimize complex high-dimensional systems (gradient descent | Fewer Lacunae). 
The key point is that evolution is not truly random; it is an iterative, cumulative process that discovers structure (such as the design of an eye or wing) and locks it into the DNA over time.
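
The idea that selection accumulates information, measured as a Kullback-Leibler divergence from a neutral distribution, can be illustrated with a deliberately simplified sketch. It tracks four hypothetical gene variants with made-up, constant fitness values and applies selection only; mutation, recombination, and random drift are all left out, so this is a toy model rather than the method of the cited study.

```python
import math

def kl_divergence_bits(p, q):
    """Kullback-Leibler divergence D(p || q) in bits for discrete distributions."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def select(freqs, fitness):
    """One generation of selection: reweight each variant by its relative fitness."""
    mean_fitness = sum(f * w for f, w in zip(freqs, fitness))
    return [f * w / mean_fitness for f, w in zip(freqs, fitness)]

# Hypothetical toy setup: four gene variants with illustrative fitness values.
neutral = [0.25, 0.25, 0.25, 0.25]   # distribution expected with no selection
fitness = [1.00, 1.02, 1.05, 1.10]   # made-up numbers, not measured data

freqs = neutral[:]
information = []                     # bits accumulated relative to the neutral baseline
for generation in range(200):
    information.append(kl_divergence_bits(freqs, neutral))
    freqs = select(freqs, fitness)

print(f"generation   0: {information[0]:.3f} bits")
print(f"generation  50: {information[50]:.3f} bits")
print(f"generation 199: {information[199]:.3f} bits")
```

In this toy setting the divergence starts at zero and climbs toward the 2-bit ceiling for a four-variant system as the fittest variant takes over the population.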

Gradient Descent as an Optimizer: In training an LLM, we also perform an iterative search, but in a far more directed way. Starting from random initial weights (analogous to random genetic variation), the training algorithm uses gradient descent to repeatedly adjust the weights to minimize a loss function. Each training example provides feedback - if the model's output (prediction) is wrong, the weights are nudged in the direction that would make the output closer to correct. This is akin to selection pressure guiding changes, except here the "pressure" is an explicit mathematical gradient telling us how to improve the model's performance. Over many training steps, the weights settle into a configuration that yields low prediction error on the training data. Like evolution, this process finds structure: for example, the model discovers syntactic rules, semantic relationships, and factual correlations present in the data, and encodes them in the weight values. Gradient descent is much more efficient than natural selection in that it uses direct feedback (the gradient) to update all weights in a coordinated way, rather than relying on random chance for beneficial mutations. However, conceptually both involve iterative refinement. Each gradient update is loosely analogous to a generation of evolution: a small change that the next iteration builds upon. Unlike a mutation, the update is not tried first and then kept or discarded; the gradient indicates in advance which direction should reduce the loss. Over time, just as species converge to fit their niche, the model converges to fit the training data distribution. Notably, both processes can get stuck in suboptimal solutions (evolution in local fitness peaks, and gradient descent in local minima), yet both have mechanisms (mutation diversity, or random initialization and stochasticity in training) that can eventually explore new regions of the solution space.
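
The update loop described above can be sketched on a problem small enough to follow by hand. The example fits a two-parameter line to made-up data with plain full-batch gradient descent; the data, starting point, and learning rate are all assumptions chosen for illustration, and real LLM training uses stochastic mini-batches and more elaborate optimizers.

```python
def loss_and_grad(w, b, data):
    """Mean squared error of the prediction w*x + b, and its gradient in w and b."""
    n = len(data)
    loss = sum((w * x + b - y) ** 2 for x, y in data) / n
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return loss, grad_w, grad_b

# Toy data generated from y = 3x + 1 (made up for illustration).
data = [(x / 10, 3 * (x / 10) + 1) for x in range(-10, 11)]

w, b = 0.0, 0.0          # arbitrary starting weights
learning_rate = 0.1
losses = []
for step in range(500):
    loss, grad_w, grad_b = loss_and_grad(w, b, data)
    losses.append(loss)
    w -= learning_rate * grad_w   # step against the gradient
    b -= learning_rate * grad_b

print(f"initial loss {losses[0]:.4f}, final loss {losses[-1]:.2e}")
print(f"learned w = {w:.3f}, b = {b:.3f}")
```

Every step uses the gradient to move both weights at once, which is the coordinated, directed feedback that distinguishes this search from mutation and selection.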

Comparing the Two Processes: Both natural selection and gradient-based learning search through a vast high-dimensional space (the space of possible genomes or weight configurations). They gradually encode useful information from the environment or data into a persistent medium (DNA or the weight matrix) by favoring configurations that perform better. The result is that the final DNA or model weights are highly optimized representations - they are not random, but have intricate structure reflecting the problems they were "trained" to solve. We can even draw direct parallels: surviving to reproduce in evolution is analogous to achieving low loss on an example in training. One can say evolution "minimizes" reproductive failure, while gradient descent minimizes prediction error. Both processes exhibit an emergent intelligence of sorts: evolution "designed" the eye without a designer, and an LLM "learns" language fluency without an explicit programmer for grammar. Furthermore, cross-disciplinary research has connected these formally. For instance, evolutionary dynamics (the replicator equation) can be framed in terms of optimizing an objective, and certain forms of natural gradient descent have been shown to closely approximate evolutionary adaptation mathematically (Conjugate Natural Selection: Fisher-Rao Natural Gradient Descent Optimally Approximates Evolutionary Dynamics and Continuous Bayesian Inference). These connections reinforce that at an abstract level, evolution and machine learning are doing the same thing: information optimization. The main differences lie in speed and mechanism: evolution takes place across generations with random variation and death as feedback, while learning happens within a single model's lifetime using direct feedback signals. 
Despite that, both leave behind a durable record of the "lessons learned" - DNA in one case and trained weights in the other - which brings us to how these records are used to generate complex outcomes.
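
For readers who want the formal object behind the replicator equation mentioned above, its standard continuous-time form is sketched here. The symbols are notation introduced for this illustration: x_i is the frequency of variant i and f_i its fitness, assumed constant, which real populations rarely satisfy. Under that assumption, mean fitness never decreases, which is the sense in which selection behaves like an optimizer.

```latex
\dot{x}_i = x_i \left( f_i - \bar{f} \right), \qquad \bar{f} = \sum_j x_j f_j

\frac{d\bar{f}}{dt} = \sum_i f_i \dot{x}_i
                    = \sum_i x_i f_i^2 - \bar{f}^2
                    = \operatorname{Var}_x(f) \ge 0
```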

3. Expressivity and Generation

Storing information is only part of the story. DNA and LLM weights are fundamentally generative. They don't just sit as databases; they actively produce complex structures and behaviors when executed (either by cellular machinery or by a forward pass in a neural network). Here the analogy between genotype (DNA) producing a phenotype and model weights producing an output becomes especially rich.

From DNA to Phenotype: A genome by itself is just a sequence of molecules, but when placed in the right environment (a living cell), it orchestrates the construction of a living organism. DNA achieves this through a regulated process of gene expression. Genes (DNA sequences) are transcribed into RNA and then translated into proteins, which then interact in unbelievably complex pathways to create cells, tissues, and organs. This developmental process can be viewed as the execution or decoding of the information in DNA. Importantly, DNA does not explicitly map out every detail of the final organism (it does not specify the exact position of every hair or the precise wiring of every neuron). Instead, the genome is more like a recipe than a blueprint - it encodes a set of rules and processes that, when carried out by the cellular "readers" of that code, result in the emergence of the final form (Over-Confident Anti-Creationists versus ... - World Scientific Publishing). For example, the genome will have instructions about forming hair follicles and producing hair, but not a literal pixel-by-pixel image of where each hair goes (Over-Confident Anti-Creationists versus ... - World Scientific Publishing). This recipe-like nature is a form of compression and generalization - by using developmental programs, a finite genome can generate a tremendously complex organism (with trillions of cells and countless features). The phenotype (observable characteristics) is thus a decompressed, expressed form of the information in the genotype. The environment and context also play a role: the same genome can lead to different outcomes in different conditions (for example, identical twins, with the same DNA, can have small differences due to environmental influences). In computational terms, the genome plus environmental inputs results in the phenotype - we might say the environment provides parameters that influence how the genetic program runs. 
Despite these variables, the DNA largely determines the range of possible forms and behaviors: it constrains and enables what an organism can be. A frog's DNA will never directly produce a wing or a human brain, just as a mouse genome will always produce hair (though not the exact pattern). In summary, DNA is an expressive code: through regulated gene expression and interaction, it generates the complexity of life. Its generative capacity is immense but not unbounded - it can only generate what it encodes (subject to some stochastic variation and environmental modulation).

From Weights to Outputs: LLM weights exhibit a parallel kind of expressivity. Once a model is trained (weights set), we use those weights to generate outputs - be it text, images, or decisions - via a forward pass of the neural network. Given an input or prompt, the model computes layer by layer, ultimately producing an output (for instance, the next word in a sentence, a completed image, or an action in a game). This process is effectively the model "expressing" its stored knowledge to create a result. Just as the cell reads DNA, the neural network's inference algorithm "reads" the weights in the context of the input. Notably, the model's weights do not contain a pre-written answer for every possible query; instead, the model has learned general principles that it applies to generate appropriate outputs on the fly. This is analogous to how the genome contains general developmental rules rather than a fixed drawing of the organism. We can liken the input prompt to an environmental factor or initial condition that influences the outcome. For example, the same LLM (with fixed weights) can generate an infinite variety of sentences depending on the prompt, much as the same genotype can lead to variations in phenotype depending on conditions. In the information-compression view mentioned earlier, the forward pass is a decompression of the weight-encoded knowledge guided by the input (Training Foundation Models as Data Compression: On Information, Model Weights and Copyright Law). For instance, a model might have implicitly encoded a fact during training; when asked a question that triggers that fact, the model "unpacks" that piece of knowledge to produce an answer. The generative power of modern models is extraordinary: with their compressed knowledge, they can produce coherent essays, realistic images, or complex strategies. Yet, like DNA, their generative ability has limits defined by what is encoded. 
An LLM cannot accurately generate information it never encountered or learned (it may try, but the result is an approximation or a hallucination). This mirrors how an organism cannot grow a completely new trait outside its genetic potential - there are constraints. Another parallel in constraints is that both systems can have failure modes in generation: an LLM can produce nonsensical output if prompted in a strange way, and a genome can produce developmental errors (such as a genetic disorder causing malformation). Both DNA and model weights rely on intricate interaction rules to generate a correct outcome (gene regulatory networks must activate genes in the right sequence; neural network layers must propagate activations in the right ranges). In both cases, small changes in initial conditions can sometimes lead to large differences in outcome, highlighting the complex mapping from stored code to generated result.
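
The point that fixed weights yield different outputs for different prompts, and nothing useful for inputs outside what was learned, can be shown with a toy stand-in. The sketch below uses a table of word-to-next-word counts as its "weights"; this is not a neural network, and the corpus and function names are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: str):
    """Count word-to-next-word transitions; these counts play the role of 'weights'."""
    counts = defaultdict(Counter)
    words = corpus.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def generate(weights, prompt: str, max_new_words: int = 2) -> str:
    """Greedy decoding: repeatedly append the most frequent successor of the last word."""
    words = prompt.split()
    for _ in range(max_new_words):
        successors = weights.get(words[-1])
        if not successors:          # nothing was learned about this word: stop
            break
        words.append(successors.most_common(1)[0][0])
    return " ".join(words)

corpus = "the cat sat on the mat . the dog slept on the rug ."
weights = train_bigram(corpus)      # fixed after "training"

print(generate(weights, "cat"))     # cat sat on
print(generate(weights, "dog"))     # dog slept on
print(generate(weights, "zebra"))   # zebra (unseen word, so nothing to express)
```

The same table produces a different continuation for each prompt, while the unseen word "zebra" yields nothing new, a miniature version of the limits described above.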

Emergence and Complexity: One of the most striking commonalities is the emergence of complexity from simplicity. A single fertilized egg cell, with its DNA, ultimately produces a complex multicellular organism - this is an emergent process where the whole is far more complex than the sum of the genetic code. Likewise, a single set of model weights can produce an entire essay or a detailed image that is far more complex than the raw parameters might suggest. In each case, the complexity comes from how the information is applied. There is a developmental or generative process (biological development or neural network computation) that amplifies and interprets the stored information to create a rich structure. This emergent property is why the analogy goes beyond surface - it is not just that DNA and weights store info, but they both translate that info into something dynamic and rich. Furthermore, both are general-purpose generative codes: DNA can make any tissue type or enzyme as needed (within the organism's life cycle), and an LLM can produce answers on almost any topic if it has been trained broadly. Each code's expression is context-sensitive: a gene might be expressed only in certain cell types or environments, similar to how certain knowledge in an LLM is activated only by relevant prompts. This context dependency was noted by the biologist Richard Dawkins: "The genome is a recipe, not a blueprint," requiring interpretation by the cellular environment (Over-Confident Anti-Creationists versus ... - World Scientific Publishing). Equivalently, the model's output is not a lookup but an interpretation of the weights conditioned on the input. Thus, DNA and LLM weights serve as generative engines, compressing knowledge and unleashing it to create complex structures (biological phenotype or answer/imagery) when given the proper conditions or prompts.

4. Mutations, Adaptations, and Fine-Tuning

No information store is static in the long run - both DNA and model weights can be modified to produce new or improved behaviors. In biology, modifications come in the form of mutations, genetic recombination, and epigenetic changes, which can lead to adaptations over generations. In AI models, we have fine-tuning, transfer learning, and iterative retraining to adapt a pre-trained model to new tasks or information. Comparing these processes reveals how both systems balance stability with adaptability, and how they handle changes while preserving core functionality.

Mutations and Biological Adaptation: A mutation is a change in the DNA sequence - it could be as small as a single base change or as large as insertion/deletion of segments. Most random mutations in a complex genome are neutral (no significant effect) or harmful (disrupting a vital function). However, on rare occasions a mutation can be beneficial, giving the organism an edge in survival or reproduction. These beneficial mutations are the raw material for adaptation. Over generations, such mutations spread (via natural selection) and the species adapts to new challenges or niches. For example, a single mutation in a bacterium might confer antibiotic resistance, dramatically changing its survival prospects. Evolution also innovates through recombination (mixing genes from parents) and gene duplication (copying a gene so one copy can mutate to a new function while the other copy maintains the original function). These mechanisms echo the idea of reusing and tweaking existing "code" - nature seldom invents entirely new genes from scratch, but rather modifies existing ones. Importantly, biological systems exhibit robustness: an organism can often tolerate certain genetic changes without losing overall viability, thanks to redundancy and buffering (for example, backup metabolic pathways, paired chromosomes, non-coding DNA that can absorb mutations, etc.). This robustness means the phenotype is not overly brittle - small genetic changes might result in no visible effect (neutral variation) or only minor changes, ensuring that organisms do not easily fall apart due to every tiny mutation. But when the environment changes significantly, having variation in the population means some individuals are pre-adapted to the new conditions. In this way, the genetic system is flexible over long time scales: it can gradually shift the population's DNA (via accumulated mutations) to better suit new environments, all while retaining the bulk of previously evolved functionality. 
In other words, adaptation is often incremental: tiny edits to DNA accumulate to produce, for example, a fin evolving into a limb or a change in coloration for camouflage, analogous to refining a solution.

Fine-Tuning and Model Adaptation: Trained model weights are also not a dead-end product; they can be further fine-tuned or adapted to new tasks or data. Fine-tuning in machine learning involves taking a model that was trained on one large dataset (for example, an LLM trained on general internet text) and then training it a bit more on a smaller, targeted dataset (for example, domain-specific text or instructions). This process adjusts the weights slightly to improve performance on the new domain, without having to train from scratch. In many ways, fine-tuning is analogous to mutating and selecting in a directed manner. Instead of random changes, we nudge the weights with gradient descent using the new data as guidance (so it is like induced mutations with selection for a specific goal). The result is a model that has "adapted" to a new environment - for instance, a general language model fine-tuned to be a polite conversational agent, or an image model fine-tuned to better handle medical images. This resembles how a population of organisms might remain mostly the same but pick up a new trait suited to a niche. Transfer learning is similarly analogous: one takes an existing network's weights (like reusing a gene) and repurposes them for a new task, possibly adding a few new neurons (like gene duplication and divergence). The success of transfer learning in AI underscores that building on existing solutions is more efficient than learning from scratch, just as evolution reuses genetic building blocks (for example, the same basic limb structure adapted into a wing, a fin, or a leg).
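
The advantage of building on existing weights can be sketched with the smallest possible model. Below, a two-parameter line is "pre-trained" on one made-up task and then given a short training budget on a related task, compared with spending the same budget from scratch. The tasks, step counts, and learning rate are illustrative assumptions, and a linear model is only a stand-in for a real network.

```python
def mse(w, b, data):
    """Mean squared error of the prediction w*x + b on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, steps, learning_rate=0.1):
    """Plain gradient descent on mean squared error for the model y = w*x + b."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [i / 10 for i in range(-10, 11)]
general_data = [(x, 3.0 * x + 1.0) for x in xs]   # stand-in for the large pre-training task
niche_data = [(x, 3.2 * x + 1.5) for x in xs]     # related task used for fine-tuning

pretrained = train(0.0, 0.0, general_data, steps=500)
fine_tuned = train(*pretrained, niche_data, steps=10)   # short adaptation from inherited weights
from_scratch = train(0.0, 0.0, niche_data, steps=10)    # same budget, nothing inherited

print(f"fine-tuned loss:   {mse(*fine_tuned, niche_data):.5f}")
print(f"from-scratch loss: {mse(*from_scratch, niche_data):.5f}")
```

With the same ten steps, the run that starts from the pre-trained weights ends much closer to the new task, because most of what it needs was already encoded.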

Model weights also demonstrate a kind of robustness to perturbation. While arbitrary changes to many weights would degrade performance (much as random mutations typically harm an organism), neural networks often have some redundancy. For example, large language models are usually over-parameterized, meaning there are more weights than strictly necessary to fit the training data. This over-parameterization yields redundancy that makes the model tolerant to low-precision weight representations or minor pruning. We see this in the fact that quantizing weights from 16-bit to 8-bit, and often to 4-bit, costs little model accuracy (A Comprehensive Evaluation of Quantization Strategies for Large Language Models) - effectively, many precise bits of those weights were not critical to the model's knowledge. In biological terms, this is akin to a mutation that changes a DNA base but does not change the amino acid (thanks to the redundancy of the genetic code) or does not affect the protein's function (due to structural robustness). There is also an analogue to neutral mutations: some changes to a trained model's weights result in little or no change in output (for example, if two neurons' roles overlap, one can compensate when the other is slightly altered). This suggests a degree of generalization and fault-tolerance in both systems: they do not memorize one rigid solution, they find a flexible solution that can withstand some change.
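
The tolerance to low-precision weights described above can be illustrated with a minimal round-to-nearest quantizer. This is a toy scheme applied to randomly generated numbers, not the methods evaluated in the cited study, and it measures only how far the weights move, not how a real model's accuracy changes.

```python
import random

def quantize(weights, bits):
    """Symmetric round-to-nearest quantization onto 2**bits - 1 evenly spaced levels."""
    levels = 2 ** (bits - 1) - 1                 # 127 for 8-bit, 7 for 4-bit, 1 for 2-bit
    scale = max(abs(w) for w in weights) / levels
    codes = [round(w / scale) for w in weights]  # the small integers that would be stored
    return [c * scale for c in codes]            # dequantized approximation of the weights

def rms_error(original, approximated):
    """Root-mean-square difference between two equally long lists."""
    return (sum((a - b) ** 2 for a, b in zip(original, approximated)) / len(original)) ** 0.5

random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(10_000)]  # made-up stand-in for a weight matrix

for bits in (8, 4, 2):
    error = rms_error(weights, quantize(weights, bits))
    print(f"{bits}-bit: RMS error {error:.5f} (weight std is about 0.02)")
```

At 8 bits the rounding error is tiny compared with the spread of the weights, while at 2 bits it is comparable to the weights themselves, which mirrors the observation that moderate quantization is nearly neutral but extreme quantization is not.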

When it comes to adaptation speed, here we see a difference born of design: we can fine-tune an LLM in a matter of hours or days to incorporate new knowledge, whereas species typically require many generations to significantly adapt via natural mutations. However, this difference is partly because in ML we supply explicit gradients (like strong directed mutations) and have a clear objective, whereas nature's feedback is slower. If we draw the analogy fully, fine-tuning an AI model is like speeding up evolution under a guiding hand - akin to selective breeding or even genetic engineering in biology, where changes are introduced in a directed way to achieve a desired trait faster than random mutation would allow. In fact, humanity has practiced artificial selection (a kind of guided evolution) to rapidly adapt species (for example, domestication of plants and animals), which is conceptually similar to how we rapidly adapt models to our needs with fine-tuning.

Robustness and Generalization: Both DNA-based systems and neural networks have to generalize and remain robust in the face of change. A species' DNA encodes general solutions (like a range of immune responses) to handle variable challenges; an LLM's weights encode general linguistic and factual knowledge to handle a wide range of prompts, not just the exact sentences seen in training. This generalization means that both can handle novel situations to an extent. But when truly novel challenges arise, adaptation is needed. In nature, either the existing genetic variation has to suffice (and individuals either survive or not), or over generations new variants will emerge. In AI, if an LLM encounters a completely new kind of query or a shift in language usage (for example, new slang or facts that emerged after its training data cutoff), it may produce suboptimal answers - indicating a need for updating the model. We can update the model by further training (which parallels introducing new mutations and selecting for performance on new data). Interestingly, modern AI research is exploring continual learning - training models to adapt to new data without forgetting the old - which is very much like how populations evolve to retain core capabilities while acquiring new ones. One challenge in AI is catastrophic forgetting, where learning new information can overwrite old knowledge if not managed well. Biological evolution, in contrast, usually retains successful genes while adding modifications - it rarely "forgets" a fundamentally useful capability entirely (unless it carries a cost), due to the gradual and distributed nature of genetic change. This is a point where the analogy suggests possible improvements to AI: nature manages lifelong learning across generations via mechanisms like modularity (separate genes for separate functions) and redundancy; similarly, techniques like modular networks or weight freezing during fine-tuning are used to avoid forgetting in models.

In summary, DNA mutations and AI fine-tuning both represent ways to update a knowledge store. DNA changes are random and slow but, accumulated under selection, lead to organisms that can handle new environments. Weight updates in a model are deliberate and fast, allowing the model to handle new tasks or information. Both systems balance being frozen (to preserve learned information) and plastic (to allow new information). That balance is crucial: too rigid and they cannot adapt; too changeable and they lose what they had previously learned. Evolution and modern ML have each found ways to strike this balance, resulting in systems that are robust yet evolvable. The strong analogy here is that previous knowledge forms the foundation upon which new adaptations are built - whether that knowledge is the genome of an ancestral species or a pre-trained language model on which we layer new training.

5. Cross-Disciplinary Perspectives

Viewing LLM model weights as analogous to DNA opens up insights across multiple disciplines. This analogy is not just poetic; it bridges concepts from genetics, neuroscience, information theory, and artificial intelligence, providing a richer understanding of each. Here we draw from these fields to reinforce the comparison:

Genetics & Evolutionary Biology: These fields provide the core metaphor - DNA as the hereditary information carrier shaped by evolution. The idea that genomes store compressed, optimized information about an organism's environment is well-established. As discussed, DNA is essentially an information archive of evolutionary "experiments", with natural selection as the curator. This perspective informs AI by highlighting the value of iterative improvement and selection in building complex adaptive systems. It also introduces concepts like the genotype-phenotype map - a complex mapping that could inspire how we think about the mapping from model weights (genotype) to output behavior (phenotype). Geneticists also speak in terms of information: for instance, the information content of DNA and how evolution increases it (Accumulation and maintenance of information in evolution - PubMed). This directly resonates with training a model, where information (in bits) is accumulated in weights. The comparison suggests thinking of an LLM's training process as a kind of accelerated "evolution" of the model's parameters to fit an informational niche (in this case, human language). It also raises interesting questions: could we apply concepts like genetic diversity to ensembles of models? Could we use evolutionary strategies to fine-tune or modify models in novel ways? In fact, evolutionary computation does exactly that, evolving neural network weights or architectures, which has occasionally matched or complemented gradient-based training (gradient descent | Fewer Lacunae).

Neuroscience & Cognitive Science: While our focus has been comparing DNA to model weights, it is worth noting the multi-level parallel in biology: DNA encodes the brain's initial wiring and potential, and the brain's synapses then encode an individual's learned knowledge. In AI, these two levels (genetic and learned) are collapsed into one - the model's weights are both the result of "species-level" learning (training on lots of data, analogous to evolutionary time) and are used directly for inference (like a brain using synapses to think). Neuroscience tells us that brains learn incrementally through adjustments in synaptic weights (via processes such as Hebbian learning), which is conceptually akin to gradient descent. But the brain's learning is guided by signals that ultimately trace back to survival and reward (an evolutionarily tuned objective), just as an AI's learning is guided by a loss function we design. The interplay between genes and brain in biology (nature vs. nurture) has a parallel in AI between pre-training (model comes with "innate" abilities from training) and fine-tuning or prompting (the model "learns" or is guided in real time by input). Thinking of an LLM as having a kind of "artificial DNA" focuses attention on the static knowledge it has inherited from training, separate from any dynamic memory it might use during a conversation. Neuroscience also offers the idea of plasticity - some parts of the brain (like the hippocampus) remain more plastic to encode new memories, whereas others are relatively fixed. This could inspire AI architectures where certain layers of a network remain plastic (updatable) for new information, while others remain fixed to preserve long-term knowledge - much like separating fine-tuning layers. In essence, the cross-talk with neuroscience encourages us to consider how and when to allow model weights to change, and how a model's "DNA" (core weights) might interact with a more ephemeral working memory.

Information Theory & Computer Science: Information theory provides a unifying language to talk about DNA and model weights. Concepts like entropy, compression, and coding efficiency apply to both genetic sequences and neural network parameters. Claude Shannon's work on information limits can be invoked to discuss how much a genome can store versus how much a model can store. We cited earlier that DNA's entropy per base is close to the 2-bit maximum for a 4-letter code (Significantly lower entropy estimates for natural DNA sequences - PubMed), indicating that the sequence carries little statistical redundancy. Analogously, a neural network has a finite capacity set by its parameters: if the data contains more information than the parameters can hold, the model underfits (it cannot capture all patterns). Thus there are "information capacity" considerations in model design similar to genome size vs organism complexity considerations. Moreover, the field of data compression itself has drawn parallels: one recent view explicitly treats foundation model training as data compression, arguing that model weights are essentially an encoded form of the training data (Training Foundation Models as Data Compression: On Information, Model Weights and Copyright Law). This viewpoint has even legal ramifications (for example, regarding copyright of compressed representations). By analyzing training through the lens of compression, researchers have proposed relationships such as an "entropy law" connecting dataset entropy, compression ratio, and model performance (Entropy Law: The Story Behind Data Compression and LLM Performance). Such insights deepen our understanding of why certain training data is more valuable - essentially, less redundant data (higher entropy) teaches the model more per token. This mirrors how diverse selective pressures in evolution (high information environments) can lead to more rapid accumulation of information in the genome.
Additionally, computer science has long used evolutionary algorithms to solve problems, confirming that evolutionary principles can be profitably applied to design systems; the success of these algorithms reinforces the analogy of optimization processes between biology and AI (gradient descent | Fewer Lacunae). Finally, thinking of model weights as DNA leads to intriguing ideas like directly editing weights. In biology, technologies like CRISPR allow targeted gene edits to produce desired traits. If model weights are DNA, one could imagine a future where we edit a few weights to inject knowledge or fix a behavior in a model, without retraining it fully. Some have speculated about this possibility: "Imagine surgically updating an AI model's weights without the need for new data - that would revolutionize AI development", analogous to gene editing in DNA (dylannikol.com). This cross-disciplinary borrowing of ideas could open new paths for AI model maintenance and evolution.
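
The entropy figures mentioned above can be made tangible with a short calculation. The sketch below, in plain Python, computes a zero-order Shannon entropy, meaning it looks only at single-base frequencies; this is an assumption of the example and gives an upper bound, since the published estimates cited in this essay rely on context-aware models. The function names `entropy_bits_per_base` and `raw_size_megabytes` are hypothetical helpers introduced here.

```python
import math
from collections import Counter


def entropy_bits_per_base(sequence):
    """Zero-order Shannon entropy: H = -sum(p * log2(p)) over symbol frequencies."""
    counts = Counter(sequence.upper())
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sequence must not be empty")
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def raw_size_megabytes(n_bases, bits_per_base=2.0):
    """Storage needed for n_bases at a fixed bit cost, in decimal megabytes."""
    return n_bases * bits_per_base / 8 / 1e6


uniform = "ACGT" * 10   # all four bases equally frequent
skewed = "AAAT" * 10    # heavily biased toward one base

print(entropy_bits_per_base(uniform))
print(entropy_bits_per_base(skewed))
print(raw_size_megabytes(3_000_000_000))
```

A sequence with equal base frequencies reaches the 2-bit ceiling of a 4-letter alphabet, while a biased one falls below it. At exactly 2 bits per base, 3 billion bases come to 750 MB, the same order as the roughly 700 MB figure quoted earlier.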

Artificial Intelligence & Philosophy of Mind: Viewing LLM weights as DNA also reframes some philosophical questions. Often AI is compared to brains, raising questions of consciousness and agency. But the DNA analogy emphasizes that an LLM is more like a genetic code than a thinking brain - it is a static repository of instructions that by itself does nothing until executed. As one commentator put it, "LLMs, like DNA, do not spontaneously evolve or think for themselves; they function strictly within their programmed parameters" (dylannikol.com). This perspective can temper our expectations of what an LLM is. It suggests that an LLM is not an agent with independent goals, just as DNA is not alive on its own - rather, both need a system around them (a cell or a computational runtime) to have effects. This can be a reassuring analogy: DNA is incredibly powerful as a code, but it is not magical - it follows the laws of chemistry and information, and we can understand it. Likewise, an LLM may produce startling outputs, but underneath it is following math and encoded knowledge, not truly autonomous cognition. This viewpoint can help AI researchers and the public conceptualize AI models in more concrete, less mysterious terms, focusing on them as products of design and data rather than as emergent untethered intelligences.

Each of these perspectives reinforces the central idea that LLM model weights are fundamentally analogous to DNA. The analogy holds from the level of information encoding and compression, through the process that creates them, to the way they are utilized and modified. By synthesizing ideas from these different fields, we not only bolster the comparison, but also open avenues for innovation: evolutionary biology can inspire new machine learning techniques, and vice versa, insights from AI might offer new metaphors for understanding life's code.

Conclusion

The comparison of LLM weights to DNA is far more than a casual metaphor - it is a deep analogy that highlights common principles of information storage, optimization, and generation in artificial and natural systems. Both DNA and model weights act as compact knowledge stores, encoding an immense amount of structured information in a relatively small space. Both are products of an iterative search for solutions - natural selection refining genomes and gradient descent training neural networks - resulting in encoded solutions that reflect the structure of the environment or data they were exposed to. Both serve as generative blueprints (or recipes) that, given the right conditions (a cellular environment or an input prompt), can produce astonishingly complex and diverse outcomes. And importantly, both are adaptable: they can change and be optimized further, through mutation and selection or fine-tuning and retraining, allowing them to meet new demands while preserving accumulated knowledge.

Exploring this analogy not only clarifies how LLMs mirror a fundamental aspect of biology, but also provides a vocabulary for discussing machine learning in terms of life-like processes. It encourages thinking of training as "evolution in weight-space," of prompts as "environmental cues," and of model improvements as "artificial mutations." Such cross-pollination of ideas can be powerful. It guides us to consider robustness and generalization (hallmarks of evolved systems) when designing AI, and conversely to use the mathematical tools of learning theory to better quantify evolutionary dynamics. The analogy even stretches to philosophical implications about what it means for something to "know" or to "create" - in both cases, a static code (be it A, C, G, T or billions of neural weights) can give rise to dynamic behavior that appears intelligent or purposeful.

Of course, there are limits to the analogy. DNA operates within the context of biochemistry and reproduces itself, whereas model weights do not self-replicate (we, the engineers, replicate or modify them). Evolution lacks foresight, while AI training is goal-directed towards a defined loss. And organisms have an additional layer of learned behavior on top of their DNA, whereas an AI's "DNA" and "brain" are essentially the same thing (the weights). Despite these differences, the fundamental parallel remains illuminating. Both systems underline the power of compressing experience into a static form that can later be unfolded to produce complexity.

In summary, LLM model weights function in a manner fundamentally analogous to DNA: both are lore of the past, distilled into a form that can generate the future. By studying one, we can gain insights into the other. This rigorous exploration shows that what might have seemed a mere metaphor is grounded in concrete similarities. Such interdisciplinary understanding not only enriches our appreciation of both biology and AI, but also inspires us to harness the principles of one field to advance the other - truly a "central dogma" of information that transcends the boundary between carbon and silicon life.

References: The concepts and comparisons made in this essay are supported by research and expert insights. For instance, the human genome's size and information content (700 MB for 3 billion base pairs) are documented in genome research archives ([Nakamura Research Group Home Page](https://www.maebashi-it.ac.jp/knakamura/research_e.html)). The idea of model training as data compression, with weights as the compressed representation, is discussed in recent information theory approaches to AI (Training Foundation Models as Data Compression: On Information, Model Weights and Copyright Law). The accumulation of information in genomes through natural selection has been quantified by population geneticists (Accumulation and maintenance of information in evolution - PubMed), while analogies between evolution and hill-climbing optimization are noted in both academic and popular analyses (gradient descent | Fewer Lacunae). The "recipe, not blueprint" view of the genome, articulated by Dawkins and others, emphasizes how generative interpretation is needed for both DNA and AI models (Over-Confident Anti-Creationists versus ... - World Scientific Publishing). Robustness of neural networks to weight quantization highlights the compressibility and redundancy of learned weights (A Comprehensive Evaluation of Quantization Strategies for Large Language Models), analogous to redundancies in genetic code. These and other sources (Entropy Law: The Story Behind Data Compression and LLM Performance) (dylannikol.com) underpin the arguments made, demonstrating that the DNA-LLM analogy is firmly rooted in scientific understanding across domains.